Personalized artificial intelligence is moving onto the device itself. Researchers at MIT, working with the MIT-IBM Watson AI Lab, have developed PockEngine, an on-device training technique that lets deep-learning models be fine-tuned directly on edge devices.

Personalized deep-learning models open the door to more adaptable AI: picture chatbots that learn to understand diverse accents, or smart keyboards that predict your next word from your own typing history. Both depend on continually fine-tuning a model with new data. Unlike traditional approaches, PockEngine makes that fine-tuning efficient by identifying the specific parts of a machine-learning model that need to be updated.

Traditionally, this kind of customization has meant sending user data to cloud servers, which consumes energy and creates security risks. PockEngine avoids both problems by training on the device itself, so edge devices such as smartphones can adapt without compromising user privacy.

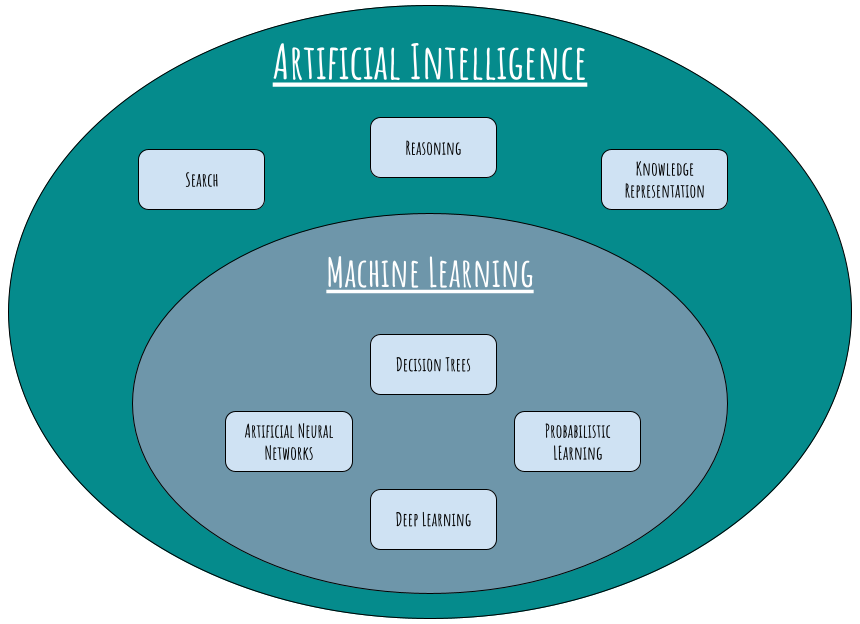

How does PockEngine do it? It determines which components of a large machine-learning model need to be updated, which keeps computational overhead low. Most of that work is done while the model is being prepared, before fine-tuning begins, so the fine-tuning itself runs faster.
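To make the idea concrete, here is a toy sketch in plain Python of deciding ahead of time which parameters to update and then fine-tuning only those. It is not PockEngine's code or its actual selection procedure: the function names (`prepare_update_plan`, `fine_tune`), the gradient-magnitude ranking, the `budget` parameter, and the four-weight linear model are all assumptions made for illustration.

```python
from typing import Dict, Iterable, List, Tuple

Sample = Tuple[List[float], float]  # (features, target)


def predict(weights: List[float], x: List[float]) -> float:
    """Toy linear model: a weighted sum of the input features."""
    return sum(w * xi for w, xi in zip(weights, x))


def gradients(weights: List[float], data: List[Sample],
              indices: Iterable[int]) -> Dict[int, float]:
    """Mean-squared-error gradient, computed only for the given weight indices."""
    indices = list(indices)
    grads = {i: 0.0 for i in indices}
    for x, y in data:
        err = predict(weights, x) - y
        for i in indices:
            grads[i] += 2.0 * err * x[i] / len(data)
    return grads


def prepare_update_plan(weights: List[float], calibration: List[Sample],
                        budget: int) -> List[int]:
    """Preparation phase (hypothetical heuristic): rank weights by gradient
    magnitude on calibration data and keep the `budget` largest."""
    all_grads = gradients(weights, calibration, range(len(weights)))
    ranked = sorted(all_grads, key=lambda i: abs(all_grads[i]), reverse=True)
    return sorted(ranked[:budget])


def fine_tune(weights: List[float], data: List[Sample], plan: List[int],
              lr: float = 0.5, steps: int = 100) -> List[float]:
    """Fine-tuning phase: gradient descent that touches only the planned weights."""
    weights = list(weights)
    for _ in range(steps):
        grads = gradients(weights, data, plan)  # frozen weights are skipped
        for i in plan:
            weights[i] -= lr * grads[i]
    return weights


# "Pretrained" weights and a small set of user samples (one-hot features,
# so each sample probes exactly one weight).
pretrained = [1.0, 2.0, 3.0, 4.0]
user_data: List[Sample] = [
    ([1.0, 0.0, 0.0, 0.0], 1.0),
    ([0.0, 1.0, 0.0, 0.0], 2.5),
    ([0.0, 0.0, 1.0, 0.0], 3.0),
    ([0.0, 0.0, 0.0, 1.0], 3.0),
]

plan = prepare_update_plan(pretrained, user_data, budget=2)
tuned = fine_tune(pretrained, user_data, plan)
print("weights selected for update:", plan)
print("fine-tuned weights:", tuned)
```

In this toy setup the plan picks weights 1 and 3, the only two that disagree with the user's data, and the other two are never touched during fine-tuning. The selection cost is paid once, up front, which mirrors the split described above between model preparation and the fine-tuning loop.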

Say goodbye to the limits of cloud-dependent updates and embrace on-device training. PockEngine puts personalization in the palm of your hand while safeguarding your data and reducing the energy spent on sending it to the cloud. The result is AI that is intelligent, adaptive, and secure.